Are machines capable of design? The question is not new, but it increasingly accompanies discussions of architecture and the future of artificial intelligence. What exactly is AI today? As we learn more about machine learning and generative design, we begin to see that these forms of "intelligence" extend beyond repetitive tasks and simulated operations. They have come to encompass cultural production and, in turn, design itself.

When artificial intelligence was envisioned in the 1950s and 1960s, the goal was to teach a computer to perform a range of cognitive tasks and operations, much as a human mind does. Half a century later, AI is shaping our aesthetic choices, with automated algorithms suggesting what we should see, read, and listen to. It also helps us make aesthetic decisions when we create media, from movie trailers and music albums to product and web designs. We have already felt some of the cultural effects of AI adoption, even if we are not aware of them.

As educator and theorist Lev Manovich has explained, computers perform countless intelligent operations. "Your smartphone’s keyboard gradually adapts to your typing style. Your phone may also monitor your usage of apps and adjust their work in the background to save battery. Your map app automatically calculates the fastest route, taking into account traffic conditions. There are thousands of intelligent, but not very glamorous, operations at work in phones, computers, web servers, and other parts of the IT universe." Beyond these everyday operations, it is useful to turn the discussion toward aesthetics and ask how these advances relate to art, beauty, and taste.

Aesthetics is usually defined as a set of "principles concerned with the nature and appreciation of beauty," but what the term means depends on whom you ask. In 2018, Marcus Endicott described how, from an engineering perspective, the traditional notion of aesthetics in computing could be termed "structural, such as an elegant proof, or beautiful diagram." A broader definition may include more abstract qualities of form and symmetry that "enhance pleasure and creative expression." As machine learning becomes more widely adopted, it is giving rise to what Endicott termed a neural aesthetic. This can be seen in recent "artistic hacks" such as DeepDream, NeuralTalk, and Stylenet.

Beyond these adaptive processes, AI shapes cultural creation in other ways. Artificial intelligence has recently made rapid advances in the computation of art, music, poetry, and lifestyle. Manovich explains that AI gives us the option to automate our aesthetic choices (via recommendation engines), to assist in certain areas of aesthetic production such as consumer photography, and to automate experiences such as the ads we see online. "Its use of helping to design fashion items, logos, music, TV commercials, and works in other areas of culture is already growing." But, as he concludes, human experts usually make the final decisions based on ideas and media generated by AI. And yes, the human vs. robot debate rages on.

According to The Economist, 47% of the work done by humans will have been replaced by robots by 2037, including jobs traditionally associated with a university education. The World Economic Forum estimated that between 2015 and 2020, 7.1 million jobs would be lost around the world as "artificial intelligence, robotics, nanotechnology and other socio-economic factors replace the need for human employees." Whether or not we believe AI may replace us, it is already changing the way architecture is practiced. As AI augments design, architects are exploring the future of aesthetics and how the design process can be improved.

In a tech report on artificial intelligence, Building Design + Construction explored how Arup applied a neural network to a light rail design, reducing the number of utility clashes by over 90% and saving nearly 800 hours of engineering. In the same vein, applications of artificial intelligence to site and social research have been covered extensively, and new examples appear almost daily. We know that machine-driven procedures can dramatically improve the efficiency of construction and operations, for example by improving energy performance and reducing fabrication time and costs. Arup's neural network shows that these gains extend to design decision-making as well. But the central question comes back to aesthetics and style.

Designer and Fulbright fellow Stanislas Chaillou recently created a project at Harvard that uses machine learning to explore the future of generative design, bias, and architectural style. While studying AI and its potential integration into architectural practice, Chaillou built an entire generation methodology using Generative Adversarial Networks (GANs). His project investigates the future of AI through architectural style learning, and his work illustrates the profound impact of style on the composition of floor plans.

As Chaillou summarizes, architectural styles carry implicit mechanics of space, and choosing one style over another has spatial consequences. In his view, style is not an ancillary, superficial, or decorative addendum; it is at the core of the composition.

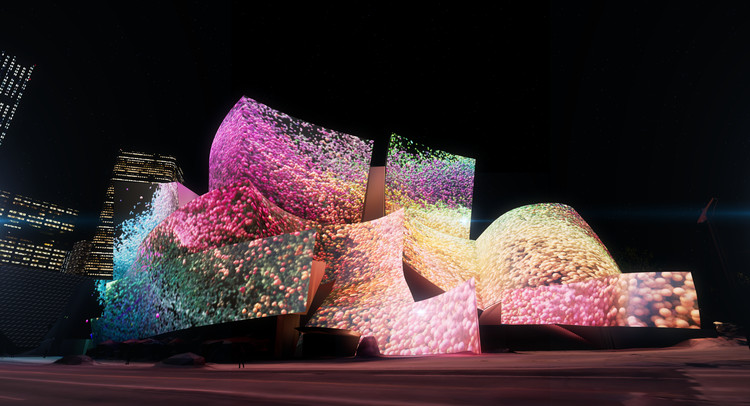

Artificial intelligence and machine learning are becoming increasingly important as they shape our future. If machines can begin to understand and affect our perceptions of beauty, we should find better ways to integrate these tools into the design process.

Architect and researcher Valentin Soana once stated that the digital in architectural design enables new systems where architectural processes can emerge through "close collaboration between humans and machines; where technologies are used to extend capabilities and augment design and construction processes." As machines learn to design, we should work with AI to enrich our practices through aesthetic and creative ideation. Beyond productivity gains, we can rethink the way we live and, in turn, how we shape the built environment.

Editor's Note: This article was originally published on March 6, 2020, and updated on February 9, 2021.